AFFECTIVE COMPUTING

Mapping a model to detect and analyse gesture

To detect emotion from gesture, the system must analyse the user's movement and match it against a common set of reference data stored in a database. The body model used for this analysis can be mapped in either 3D or 2D, depending on the level of detail the system requires.

3D model-based mapping

Volumetric-based algorithm

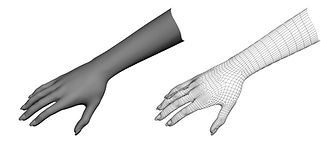

This algorithm offers the highest level of precision and is commonly used in computer graphics and animation. The surface of the subject is mapped onto a 3D mesh model (see Figure 1). However, because of the level of detail involved, the method is very computationally intensive and is not yet practical for live gesture analysis.

Figure 1: 3D mesh model mapping

Figure 2: replacing main body parts with simple objects

In Figure 2, simple objects such as cylinders and spheres are used to approximate body parts. By dropping fine details of the model and focusing on the important parts of the body, this approach reduces processing time and computational load.

Skeletal-based algorithm

A less intensive method is to reduce the body to a simple model of joints and the angles between body parts. Most of the analysis concerns the orientation and location of each part. Because only these key parameters are computed, the analysis is faster than the volumetric approach, and matching against templates from the database is easier.
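One of the key parameters in a skeletal model is the angle at a joint, and it can be computed from just three joint positions. The sketch below assumes a hypothetical skeleton stored as a dictionary of joint names mapped to (x, y, z) coordinates. It computes the elbow angle from the shoulder, elbow and wrist positions.

```python
import numpy as np


def joint_angle(a, b, c) -> float:
    """Angle in degrees at joint `b`, formed by the segments b->a and b->c."""
    ba = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    bc = np.asarray(c, dtype=float) - np.asarray(b, dtype=float)
    cos_angle = np.dot(ba, bc) / (np.linalg.norm(ba) * np.linalg.norm(bc))
    # Clip to guard against rounding errors pushing the value just outside [-1, 1].
    return float(np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0))))


# Hypothetical skeleton: joint name -> (x, y, z) position in metres.
skeleton = {
    "shoulder": (0.0, 1.4, 0.0),
    "elbow": (0.3, 1.4, 0.0),
    "wrist": (0.3, 1.7, 0.0),
}

elbow_angle = joint_angle(skeleton["shoulder"], skeleton["elbow"], skeleton["wrist"])
print(round(elbow_angle, 1))  # 90.0
```

A pose reduced to a few such angles and joint locations is a short vector, so comparing it with stored templates is cheap.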

Figure 3: skeletal-based mapping

Simple markers mapping

Another practical method is to attach 'markers' to different parts of the subject's body and use cameras to capture these points and map them into 3D space. The path of each point can then be recorded over a period of time. This approach ignores most of the detail and focuses only on the main joints of the body. A well-known commercial example is the VICON motion capture system.
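Recording the path of each marker amounts to appending one 3D position per marker for every captured frame. The sketch below assumes the cameras have already triangulated each marker into (x, y, z) coordinates, which is the step a system like VICON performs and is not shown here. The `MarkerRecorder` class and the marker names are hypothetical.

```python
from collections import defaultdict

import numpy as np


class MarkerRecorder:
    """Stores the trajectory of each named marker, one (x, y, z) position per frame."""

    def __init__(self) -> None:
        self.paths: dict[str, list[tuple[float, float, float]]] = defaultdict(list)

    def record_frame(self, frame: dict[str, tuple[float, float, float]]) -> None:
        """Append the 3D position of every marker seen in one frame."""
        for marker, position in frame.items():
            self.paths[marker].append(position)

    def path_length(self, marker: str) -> float:
        """Total distance travelled by one marker over the recording."""
        points = np.asarray(self.paths.get(marker, []), dtype=float)
        if len(points) < 2:
            return 0.0
        # Sum the straight-line distances between consecutive frames.
        return float(np.linalg.norm(np.diff(points, axis=0), axis=1).sum())


recorder = MarkerRecorder()
recorder.record_frame({"left_wrist": (0.0, 0.0, 0.0), "head": (0.0, 1.7, 0.0)})
recorder.record_frame({"left_wrist": (3.0, 4.0, 0.0), "head": (0.0, 1.7, 0.0)})
print(recorder.path_length("left_wrist"))  # 5.0
```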

Figure 4: VICON motion capture system

2D view-based mapping

This method takes the input from one or more cameras and reduces the subject to a 2D outline. It is mostly used in multimodal emotion recognition to minimise processing time and load, so that other analyses, such as facial expression and speech recognition, can also be carried out. The subject is observed in an environment where their body parts stand out, e.g. in bright-coloured rooms while wearing bright-coloured shirts.
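Colour thresholding is one simple way to take advantage of body parts that stand out in the image. This is an assumption for illustration, not necessarily the technique used in the systems described. The sketch below marks every pixel whose RGB colour is close to a known target colour, such as that of the subject's shirt. It then reduces the resulting 2D silhouette to a centroid and a bounding box. The function names and the tolerance value are hypothetical.

```python
import numpy as np


def colour_mask(image: np.ndarray, target_rgb, tolerance: float) -> np.ndarray:
    """Boolean H x W mask of pixels within `tolerance` (Euclidean RGB distance) of `target_rgb`."""
    diff = image.astype(float) - np.asarray(target_rgb, dtype=float)
    return np.linalg.norm(diff, axis=2) <= tolerance


def silhouette_features(mask: np.ndarray):
    """Return ((cx, cy), (x_min, y_min, x_max, y_max)) for the masked pixels, or None if empty."""
    ys, xs = np.nonzero(mask)
    if xs.size == 0:
        return None
    centroid = (float(xs.mean()), float(ys.mean()))
    bbox = (int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max()))
    return centroid, bbox


# A tiny 6 x 6 dark frame with a bright red patch standing in for a shirt.
frame = np.zeros((6, 6, 3), dtype=np.uint8)
frame[1:4, 2:4] = (255, 0, 0)
mask = colour_mask(frame, target_rgb=(255, 0, 0), tolerance=60.0)
print(silhouette_features(mask))  # ((2.5, 2.0), (2, 1, 3, 3))
```

Tracking the centroid from frame to frame gives an (x, y) path per body part. This path is much cheaper to compute than a full 3D reconstruction.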

Figure 5: 2D-based model

Matching with Database

Subjects can act out basic emotions, which are recorded to build the template database used for comparison and detection. Some experiments use professional dancers to obtain expressive gestures. The features extracted from the movements are mainly the Cartesian coordinates of key points, (x, y, z) in 3D space or (x, y) in 2D, together with the velocity and acceleration of those points. The features shared by recordings of the same emotion can then be stored as the base data for that emotion. The detection system can also be designed to refine the database over time through machine learning.